I argue that sophisticated embodied robots will employ conceptual schemes that are radically different to our own, resulting in what might be described as "alien intelligence". Here I introduce the ideas of embodied robotics and conceptual relativity, and consider the implications of their combination for the future of artificial intelligence. This argument is intended as a practical demonstration of a broader point: our interaction with the world is fundamentally mediated by the conceptual frameworks with which we carve it up.

Embodied Robotics

In contrast with the abstract, computationally demanding solutions favoured by classical AI, Rodney Brooks has long advocated what he describes as a "behavior-based" approach to artificial intelligence. This revolves around the incremental development of relatively autonomous subsystems, each capable of performing only a single, simple task, but together producing complex behaviour. Rather than building internal representations of the world, his subsystems take full advantage of their environment in solving tasks. This is captured in Brooks' famous maxim, "The world is its own best model" (1991a: 167).

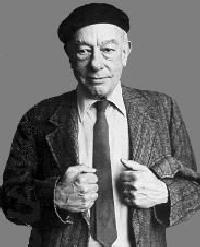

[PICTURE OF ALLEN]

A simple example of this approach is the robot "Allen", designed by Brooks in the late 1980s. Allen contained three subsystems: one to avoid objects, one to initiate a "wandering" behaviour, and one to direct the robot towards distant places. None of the subsystems connects to a central processor; instead, each takes control when circumstances make it necessary. So if an obstacle looms while the robot is moving towards a distant point, the first subsystem will take over from the third and manoeuvre the robot around the obstacle. Together these subsystems produce flexible and seemingly goal-oriented behaviour at little computational cost (1986; 1990: 118-20).
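The way one subsystem takes over from another can be illustrated with a short Python sketch. This is a simplification of my own and not Brooks' actual design: the `Reading` fields, the half-metre threshold, and the command strings are all hypothetical, and the ordered loop in `step` merely stands in for whatever wiring lets one subsystem override another. The point it illustrates is that each behaviour reacts only to the current sensor reading, with no stored model of the world.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    """Hypothetical sensor snapshot; the field names are illustrative assumptions."""
    nearest_obstacle_m: float                 # distance to the closest object
    distant_target_bearing: Optional[float]   # degrees; None if nothing distant is seen


# Each behaviour inspects only the current reading and either proposes a
# command or returns None. Nothing is remembered between calls.
def avoid(r: Reading) -> Optional[str]:
    # Assumed threshold: anything closer than 0.5 m counts as looming.
    return "steer_away" if r.nearest_obstacle_m < 0.5 else None


def seek_distant(r: Reading) -> Optional[str]:
    if r.distant_target_bearing is None:
        return None
    return f"head_towards:{r.distant_target_bearing:.0f}"


def wander(r: Reading) -> Optional[str]:
    return "wander"


# Ordered so that avoidance takes over from seeking, as in the obstacle example.
BEHAVIOURS = [avoid, seek_distant, wander]


def step(r: Reading) -> str:
    """Return the command of the first behaviour that wants control."""
    for behaviour in BEHAVIOURS:
        command = behaviour(r)
        if command is not None:
            return command
    return "idle"
```

Calling `step(Reading(3.0, 45.0))` lets the distance-seeking behaviour drive, while `step(Reading(0.2, 45.0))` hands control to avoidance even though the distant target is still in view.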

Whilst initially his creations were quite basic, Brooks' approach has shown promise, and it seems plausible that embodied robotics could eventually result in sophisticated, even intelligent, agents. If it does, these agents are unlikely to replicate human behaviour precisely. Brooks has stated openly that he has "no particular interest in demonstrating how human beings work" (1991b: 86), and his methodology relies on taking whatever solutions seem to work in the real world, regardless of how authentic they might be in relation to human cognition. It is for this reason that I think embodied robotics is a particularly interesting test-case for the idea of conceptual relativity.

Conceptual Relativity

A concept, as I understand it, is simply any category that we use to divide up the world. In this respect I differ from those who restrict conceptual ability to language-using humans, although I do acknowledge that linguistic ability allows for a distinctly broad range of conceptual categories. To make this distinction clear we could refer to the non-linguistic concepts possessed by infants, non-human animals, and embodied robots as proto-concepts. I don't think the label matters, so long as it is used consistently.

Conceptual relativity is simply the idea that the concepts available to us (our "conceptual scheme") might literally change the way that we perceive the world. Taken to its logical extreme, this could result in a form of idealism or relativism, but more moderately we can simply acknowledge that our own perceptual world is not epistemically privileged, and that other agents might experience things very differently.

[HUMAN PARK/BEE PARK]

Consider a typical scene: a park with a tree and a bench. For us it makes most sense to say that there are two objects in this scene, although if pushed we might admit that these objects can be further decomposed. For a bee, on the other hand, the flowers on the tree are likely to be the most important features of the environment, and the bench might not even register at all. Our conceptual schemes shape our perceptual experience.

Alien Intelligence

Brooks (1991a: 166) describes how an embodied robot divides the world according to categories that reflect the task, or tasks, for which it was designed. My claim is that this process of categorisation constitutes the creation of a conceptual scheme that might differ radically from our own. Allen, introduced in the first section, inhabits a world that consists solely of "desirable", far-away objects and "harmful", nearby objects.

[ALLEN'S WORLD/RODNEY'S WORLD]

A more sophisticated embodied robot, which we might call Rodney, could inhabit a correspondingly more sophisticated world. Assume that Rodney has been designed to roam the streets and apprehend suspected criminals, whom he identifies with an advanced facial recognition device. Rodney is otherwise similar to Allen: he has an object avoidance subsystem and a "wandering" subsystem. Rodney divides human-shaped features of his environment into two conceptual categories: "criminal" and "other". He completely ignores the latter category, and we could imagine that its members don't even enter into his perceptual experience. His world consists of attention-grabbing criminal features, objects to be avoided, and very little else.
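Rodney's way of carving up a scene can be sketched in a few lines of Python. This is only an illustration under assumptions of my own: the `Feature` fields, the one-metre avoidance range, and the boolean `face_match` standing in for the output of the facial recognition device are all hypothetical. What the sketch tries to show is that anything falling outside Rodney's categories is not merely given low priority; it never appears in his "world" at all.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Feature:
    """A raw feature of the environment (all fields are illustrative assumptions)."""
    kind: str                  # e.g. "human", "bench", "tree"
    distance_m: float
    face_match: bool = False   # stand-in for the facial recognition result


def categorise(f: Feature) -> Optional[str]:
    """Map a feature onto one of Rodney's categories, or None if it does not register."""
    if f.kind == "human":
        # Humans in the "other" category are dropped entirely, following the text.
        return "criminal" if f.face_match else None
    if f.distance_m < 1.0:     # assumed avoidance range
        return "obstacle"
    return None


def perceive(scene: List[Feature]) -> List[Tuple[str, float]]:
    """Rodney's 'world': only the categorised features, each with its distance."""
    world = []
    for f in scene:
        category = categorise(f)
        if category is not None:
            world.append((category, f.distance_m))
    return world


scene = [
    Feature("human", 5.0, face_match=True),
    Feature("human", 2.0),
    Feature("bench", 0.5),
    Feature("tree", 10.0),
]
print(perceive(scene))
```

Of the four features in `scene`, only the matched face and the nearby bench survive categorisation; the passer-by and the distant tree are simply absent from what `perceive` returns.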

Of course, there's no guarantee that the embodied robotics program will ever achieve the kind of results that we would be willing to describe as intelligent, or, even if it does, that such intelligences will be radically non-human. A uniquely human conceptual apparatus might turn out to be an essential component of higher-order cognition. Despite this possibility, I think it likely that, so long as a behaviour-based strategy is pursued, embodied robots will develop conceptual schemes that are alien to us, with a resulting impact on their perception and understanding of the world.

References

- Brooks, R. 1986. "A Robust Layered Control System for a Mobile Robot." IEEE Journal of Robotics and Automation RA-2: 14-23. Reprinted in Brooks 1999: 3-26.

- Brooks, R. 1990. "Elephants Don't Play Chess." Robotics and Autonomous Systems 6: 3-15. Reprinted in Brooks 1999: 111-32.

- Brooks, R. 1991a. "Intelligence Without Reason." Proceedings of the 1991 International Joint Conference on Artificial Intelligence: 569-95. Reprinted in Brooks 1999: 133-86.

- Brooks, R. 1991b. "Intelligence Without Representation." Artificial Intelligence 47: 139-60. Reprinted in Brooks 1999: 79-102.

- Brooks, R. 1999. Cambrian Intelligence. Cambridge, MA: MIT Press.