I want to begin fleshing out an argument I've been mulling over. It’s far from a comprehensive thesis. Rather, I want to use this blog to sketch out some preliminary ideas. The argument takes off from the notion that whether or not animals are conscious informs the importance of human-animal interaction and dictates the course of animal ethics.


| A hedgehog struggling to remain conscious... |

I want to explore the idea that treating animals as if they are conscious carries moral weight from the perspective of a cost-benefit analysis. The “wager argument” starts with the premise that we have a choice to treat animals either as if they are conscious or as if they are not. I will assume for now that consciousness includes the capacity to feel physical and emotional sensations, such as pain and pleasure, from a familiar first-person perspective (I’m strategically evading the problem of defining consciousness, but I’m fully aware of its spectre; see below).


| Animals wagering. Not what I'm talking about. |

The argument looks something like this: you are better off treating animals as if they are conscious beings, because if they are indeed conscious beings you have done good, but if they are not conscious beings then you have lost nothing. Alternatively, if you treat animals as if they are not conscious, and they are, you have caused harm. It is better to hedge your bet and assume animals are conscious.


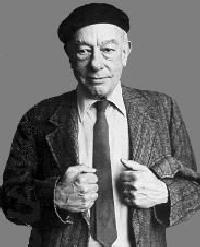

| Pascal. I'll wager he Blaised his way through academia... (sorry). |

Here's the argument in boring step-by-step premises:

P1 An animal is a being that is conscious or is not conscious.

P2 We may treat an animal as if it is conscious or as if it is not conscious.

P3 Treating a conscious being as if it is conscious or as if it is not conscious bears morally significant differences.

P4 Treating an animal as if it is not conscious when it is conscious will (practically) bear morally significant harm.

P5 Treating an animal as if it is not conscious when it is not conscious will bear no morally significant difference.

P6 Treating an animal as if it is conscious when it is not conscious will bear no or negligible morally significant difference.

P7 Treating an animal as if it is conscious when it is conscious will (practically) bear morally significant good, or at the very least will bear no moral significance.

P8 We ought to behave in a way that promotes morally significant good, or at least avoids morally significant harm.

C We ought to treat animals as if they are conscious.

Note that by “practically” I mean that it does not necessarily follow as a logical result, but follows as a real-world likelihood.
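One way to picture premises P4 to P7 is as a two-by-two decision table: two ways of treating an animal, crossed with two possible states of the world. The sketch below is only an illustration of that structure. The numeric values for harm, neutral and good are placeholder assumptions (only their ordering matters), and the function and variable names are hypothetical. It checks whether one choice is never worse than the other and sometimes better, which is what the conclusion relies on.

```python
# Placeholder ordinal values; assumed for illustration, only the ordering matters.
HARM, NEUTRAL, GOOD = -1, 0, 1

# payoffs[treatment][actual state of the animal]
payoffs = {
    "treat_as_conscious": {"conscious": GOOD, "not_conscious": NEUTRAL},      # P7, P6
    "treat_as_not_conscious": {"conscious": HARM, "not_conscious": NEUTRAL},  # P4, P5
}


def weakly_dominates(a, b, table):
    """True if choice a is never worse than b and strictly better in at least one state."""
    states = table[a].keys()
    never_worse = all(table[a][s] >= table[b][s] for s in states)
    sometimes_better = any(table[a][s] > table[b][s] for s in states)
    return never_worse and sometimes_better


print(weakly_dominates("treat_as_conscious", "treat_as_not_conscious", payoffs))

# Hypothetical variant: suppose P6 fails, so that treating a non-conscious
# animal as conscious carries a real cost.
payoffs_if_p6_fails = {
    "treat_as_conscious": {"conscious": GOOD, "not_conscious": HARM},
    "treat_as_not_conscious": {"conscious": HARM, "not_conscious": NEUTRAL},
}

print(weakly_dominates("treat_as_conscious", "treat_as_not_conscious", payoffs_if_p6_fails))
```

With the premises as stated, the first check comes out true. In the variant, neither choice dominates, so the conclusion would then depend on how likely consciousness is and how the costs compare, which a simple table like this cannot settle.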

The argument assumes that whether we think an animal is conscious or not makes a big difference to the way we ought to treat them. It also assumes that treating them as not conscious will lead to harm. How we flesh out "harm" is going to depend on our moral framework, and I think this argument most obviously fits into a consequentialist paradigm.

Regardless, I think the idea is pretty intuitive. If you believe your dog has the capacity for physical and emotional sensation, you are likely to treat her differently than if you think her experience of the world is much the same as a banana's. Within medical testing, we may exercise greater caution over harmful experiments on those animals to which we can reasonably attribute consciousness. We may altogether exclude conscious beings from butchery, or at least from any practice that might be painful. More radically, we may believe that any being we regard as conscious should be afforded the same sort of moral attention as humans. What matters is a “significant difference”, and this needs to be examined.

The premises obviously need to be elaborated upon, and I already have my own serious criticisms. Two in particular stand out: the problem of treating consciousness as simple and binary; and the assumption in premise 6 that treating animals as if they are conscious, when in fact they are not, will not result in morally significant harm (e.g. think of potential medical breakthroughs via “painful” animal experimentation or the health benefits of a diet that includes animal protein). I do believe the wager argument has the strength to fight back against such criticisms, but I don’t think it will come away unscathed. In the near future I’ll look at the argument in a little more detail and start examining these criticisms.